最近线上的一个项目遇到了内存泄露的问题,查了heap之后,发现 http包的 dialConn函数竟然占了内存使用的大头,这个有点懵逼了,后面在网上查询资料的时候无意间发现一句话

10次内存泄露,有9次是goroutine泄露。

结果发现,正是我认为的不可能的goroutine泄露导致了这次的内存泄露,而goroutine泄露的原因就是 没有调用 response.Body.Close()

实验

既然发现是 response.Body.Close() 惹的祸,那就做个实验证实一下

不close response.Body

1 | func main() { |

##close response.Body

1 | func main() { |

结果

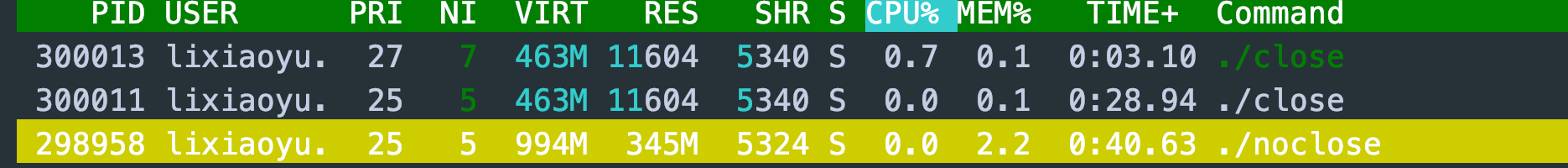

同样的代码,区别只有 是否resp.Body.Close() 是否被调用,我们运行一段时间后,发现内存差距如此之大

后面,我们就带着问题,深入一下Http包的底层实现来找出具体原因

结构体

只分析我们可能用会用到的

Transport

1 | type Transport struct { |

pconnect

1 | type persistConn struct { |

writeRequest

1 | type writeRequest struct { |

##requestAndChan

1 | type requestAndChan struct { |

请求流程

这里的函数没有太多的逻辑,贴出来主要是为了追踪过程

这里用一个简单的例子表示

1 | func main() { |

client.Get

1 | var DefaultClient = &Client{} |

1 | func (c *Client) Get(url string) (resp *Response, err error) { |

client.do

1 | func (c *Client) do(req *Request) (retres *Response, reterr error) { |

client.send

1 | func (c *Client) send(req *Request, deadline time.Time) (resp *Response, didTimeout func() bool, err error) { |

send

1 | func send(ireq *Request, rt RoundTripper, deadline time.Time) (resp *Response, didTimeout func() bool, err error) { |

Transport.roundTrip

这里开始接近重点区域了

这个函数主要就是湖区连接,然后获取response返回

1 | func (t *Transport) roundTrip(req *Request) (*Response, error) { |

接下来,进入重点分析了 getConn persistConn.roundTrip Transport.dialConn 以及内存泄露的罪魁祸首 persistConn.readLoop persistConn.writeLoop

Transport.getConn

这个方法根据connectMethod,也就是 schema和addr(忽略proxy代理),复用连接或者创建一个新的连接,同时开启了两个goroutine,分别 读取response 和 写request

1 | func (t *Transport) getConn(treq *transportRequest, cm connectMethod) (*persistConn, error) { |

上面的 handlePendingDial 方法中,调用了 putOrCloseIdleConn,这个方法到底干了什么,跟 idleConnCh 和 idleConn 有什么关系?

Transport.putOrCloseIdleConn

1 | func (t *Transport) putOrCloseIdleConn(pconn *persistConn) { |

Transport.tryPutIdleConn

1 | func (t *Transport) tryPutIdleConn(pconn *persistConn) error { |

Transport.dialConn

跑偏了一会,现在接着 getConn分析 dialConn 这个函数

这个函数主要就是创建了一个 连接,然后 创建了两个goroutine,分别去往这个连接写入请求(writeLoop函数)和读取响应(readLoop函数)

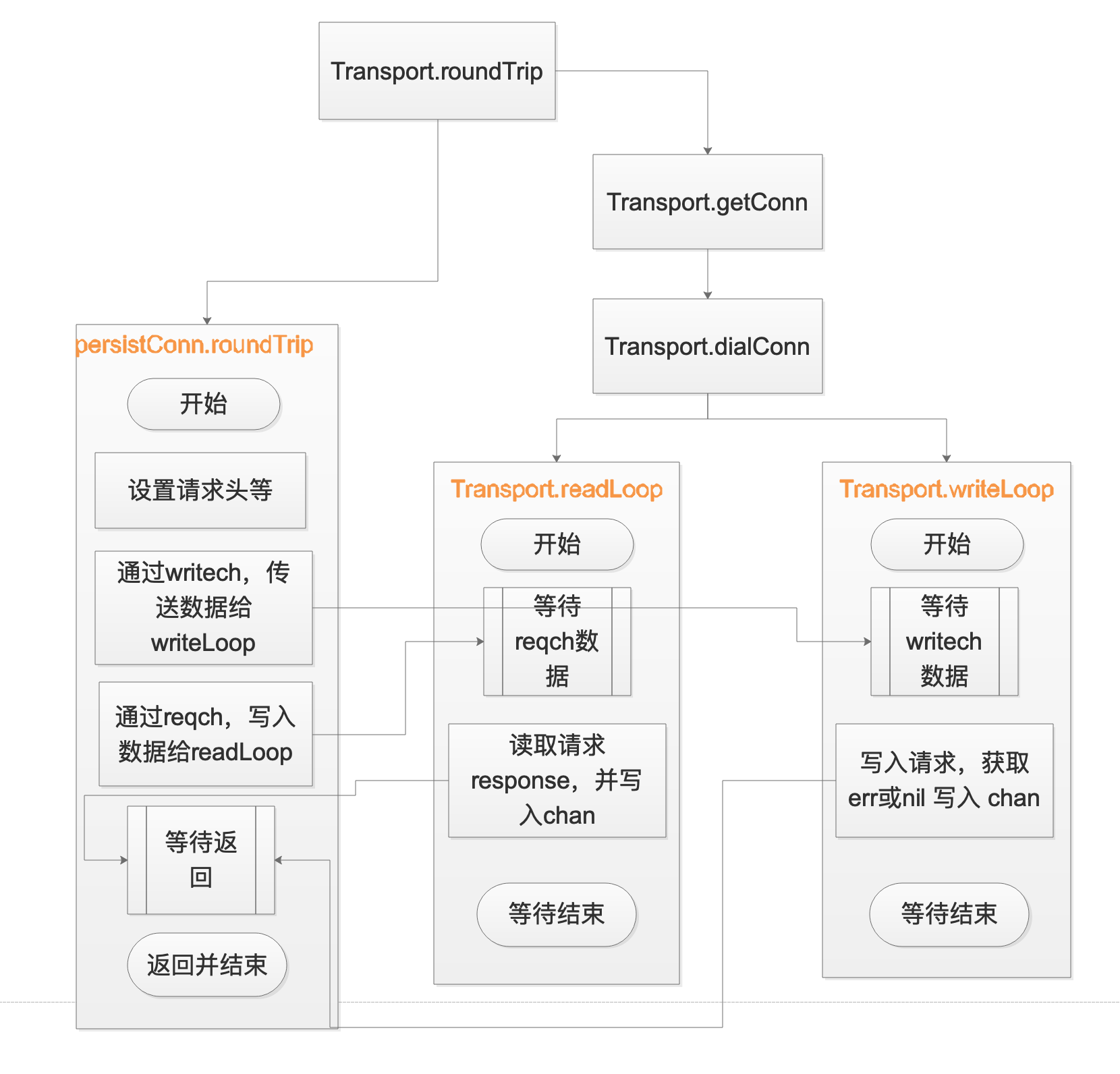

而这两个函数,又会与 persistConn.roundTrip 通过chan进行关联,这里先对函数进行分析,分析完成后,再画出对应的关联图示

1 | func (t *Transport) dialConn(ctx context.Context, cm connectMethod) (*persistConn, error) { |

persistConn.readLoop

readLoop 这里从连接中读取 response,然后通过chan发送给persistConn.roundTrip,最后等待结束

1 | func (pc *persistConn) readLoop() { |

persistConn.writeLoop

相对于persistConn.readLoop, 这个函数就简单很多,其主要功能也就是往连接里面写request请求

1 | func (pc *persistConn) writeLoop() { |

persistConn.roundTrip

无论是 persistConn.readLoop 还是 persistConn.writeLoop 都避免不了和这个函数交互,这个函数的重要性也就不言而喻了

但是 这个函数的主要逻辑就是 创建个连接的 writeRequest chan, 也就是 writeLoop 用到的chan,然后把request 通过这个 chan 传给 persistConn.writeLoop ,然后 在创建一个 responseAndError chan,也就是 readLoop 用到的chan,从 这个chan中获取 persistConn.readLoop 获取到的 response,最后把 response返回给上层函数

1 | func (pc *persistConn) roundTrip(req *transportRequest) (resp *Response, err error) { |

交互图示

上面 persistConn.roundTrip persistConn.readLoop persistConn.writeLoop 之间的数据交互,可能靠语言比较苍白,这里画一下图示

小结

综上分析,可以发现,readLoop和 writeLoop 两个goroutine在 写入请求并获取response返回后,并没有跳出for循环,而继续阻塞在 下一次for循环的select 语句里面,所以,两个函数所在的goroutine并没有运行结束,导致了最开的现象: goroutine持续增加导致内存持续增加

Close流程

close

close的主要逻辑是通过调用 readLoop 的第89行定义的earlyCloseFn 方法, 向 waitForBodyRead 的chan写入false,进而让 readLoop 退出阻塞,终止 readLoop 的 goroutine

readLoop 退出的时候,关闭 closech chan,进而让 writeLoop 退出阻塞,终止 writeLoop 的goroutine

1 | func (es *bodyEOFSignal) Close() error { |

##earlyCloseFn

定义的 earlyCloseFn 方法

1 | body := &bodyEOFSignal{ |

结束readLoop

回过头来看 readLoop 阻塞的代码

1 | select { |

当 readLoop 退出的时候,调用函数最开始定义的 defer 函数

1 | defer func() { |

结束writeLoop

继续看一下 writeLoop 阻塞的代码

1 | select { |